我的学习笔记

土猛的员外

通过5个参数控制RAG在不同场景下的准确度

昨晚和小明在讨论elasticsearch的检索,整整写了三黑板。主要原因是elasticsearch的检索有knn(其实是ann,前面文章有讲过)和bm25两种,如何在不同的场景(针对不同客户)设置不同的boost比例就变得非常重要。昨晚讨论的最终结果是在针对不同的客户(租户)都分别拉出五个参数,便于在面对不同客户场景时可以将检索准确度做到最佳。

可以简单展示一下其中的三个参数:

- boost:这是es自带的参数,取值0-1,一般是用来控制混合检索中BM25和KNN的分值占比的。我们内部会写成

boost * BM25_SCORE+(1-boost) * KNN_SCORE。也就是说,boost=1,那么就完全用BM25的得分来排序了,以此类推; - kms:kNN_min_score,取值0-1,这是用来设置重排之后的knn得分最小值,低于这个值,我们认为RAG检索无召回。比如kms=0.6,就是重排之后得分低于0.6的结果都不需要。如果所有召回的结果都低于0.6,那么就看下一个参数

f_llm; - f_llm:finally_llm,True | False,默认是False。如果设置成True,那么在某次检索中所有召回结果分值都低于kms的时候,系统会将用户的原始Query直接给到LLM。否则,系统会告诉用户,“据已知资料,无法回答您的问题!”

LLM企业应用调查报告——使用方式、问题和展望

看到O’Reilly的调查好文,翻译转发分享给大家。

本文的主要内容:

- 企业是如何使用生成式AI的?

- 在使用中遇到了哪些瓶颈?

- 企业希望生成式AI可以解决哪些缺陷和差距?

生成式AI是2023年最大的科技故事。几乎每个人都玩过ChatGPT、Stable Diffusion、GitHub Copilot或Midjourney。一些人甚至试用了Bard或Claude.ai,或者在他们的笔记本电脑上运行Llama(或Llama.cpp)。每个人都对这些语言模型和图像生成程序将如何改变工作的本质、迎来奇点、甚至可能毁灭人类有着自己的看法。在企业中,我们看到了从大规模采用,到严格限制,甚至禁止使用生成式AI的风向变化。

现实是什么?我们想知道人们到底在做什么,所以在9月份我们调查了O’Reilly的用户。我们的调查重点是:

- 企业是如何使用生成式AI的?

- 在使用中遇到了哪些瓶颈?

- 企业希望生成式AI可以解决哪些缺陷和差距?

调查报告主要内容

大模型商业应用的天王山之战——“消灭”LLM幻觉

本文主要内容:

大模型LLM为什么会有幻觉?

“消灭”幻觉的四个主要方法

幻觉如何检测

在之前我一篇文章比较受欢迎的文章《大模型主流应用RAG的介绍——从架构到技术细节》中提到了大语言模型(LLM)的主要缺点有三点:

- 幻觉问题:大模型的底层原理是基于概率,所以它有时候会一本正经胡说八道,比如我们问大模型的问答系统,“良渚博物院下周一开门吗?”我相信这样的问题你不能连续问,因为大模型会有一定的几率告诉你开门。而如果游客真的在下周一去了良渚博物院,那估计就要失望了,如果这个Chat还是博物院官方提供的,那事情最终会演变成一通12345的投诉电话。所以在很多需要非常精确的场景,仅仅依赖GPT的这种生成式回答是很不严谨的,而且看起来很难消除。

- 新鲜度问题:规模越大(参数越多、tokens越多),大模型训练的成本越高。类似OpenAI的ChatGPT3.5,目前的数据新鲜度依然保留在2021年,对于之后的事情就不知道了。而且对于一些高时效性的事情,大模型更加无能为力,比如帮我看看今天晚上有什么电影值得去看?这种任务是需要去淘票票、猫眼等网站先去获取最新电影信息的,大模型本身无法完成这个任务。

- 数据安全:OpenAI已经遭到过几次隐私数据的投诉,而对于企业来说,如果把自己的经营数据、合同文件等机密文件和数据上传到互联网上的大模型,那想想都可怕。如果企业人员想提一个类似这样的问题:“帮我看看3月份XX部门的销售环比数据与哪些兄弟部门的增长是密切相关的?”,这需要打穿企业内部的很多数据。既要保证安全,又要借助AI能力,那么最好的方式就是把数据全部放在本地,企业数据的业务计算全部在本地完成。而在线的大模型仅仅完成一个归纳的功能,甚至,LLM都可以完全本地化部署。

这三个问题中,“新鲜度问题”已经基本上被解决了,像GPT-4 Turbo这样的最新大模型已经有类似RAG(Retrieval Augmented Generation,检索增强生成)这样的技术,可以借助外挂快速吸收最新知识(世界知识)。剩下的两个问题,“数据安全”最好的解决方案当然是本地化,敏感数据不上公网才是最安全的。次之的解决方案是使用可信度更高的大模型厂商,他们肯定都有基本的职业操守。当然这不是本文主要讨论的问题。好了,剩下最棘手的就是大模型的“幻觉问题”了,所以今天我们主要来讲讲幻觉,以及如何“消灭”幻觉。

幻觉产生的原因

就大语言模型(LLM)本身来说,可能是永远无法消除“幻觉”的,就像Sam Altman说:“幻觉和创造性是等价的”。这个概念我似乎很早就理解了,因为就像生物进化一样,只有不稳定的随机性才能带来的多样性,而多样性才能让物种穿越周期不断进化。

用弹子球机解释LLM原理,包括损失函数和梯度下降

本文重点:

用弹子球机来展示大语言模型的一些内部原理

如何去调整参数,以达到我们想要的模型输出效果

今年5月份的时候我说国内真正使用过ChatGPT的人不超过5%,但是到了11月份,我再和企业、政府等的一些客户交流时,已经很难再碰到整个交谈过程中不说大语言模型(LLM)的了。但是说实话,大部分人对于LLM的了解还是很“新闻”化的,看到了现象但不达本质,往往造成了对LLM的“神化”。所以今天这篇文章,我希望用相对不那么技术化的描述来讲讲LLM的一些原理和概念,让大家对LLM有更近一步了解,也许对大家后面使用LLM及其应用有一定的帮助。

首先做个说明:本文不会讲太多数学公式,我会尽量保证非数学、统计专业的朋友可以看懂。

弹子球机

今年2月份我写过一篇关于GPT的文章《ChatGPT会给文旅行业带来什么改变》,里面提了一下ChatGPT的原理,如下图所示。

当时说的是假设我们有一个弹子球机器,把各种不同(颜色、重量、尺寸)的球从顶部扔进去,球会和里面的这些柱子(杯子上方的这些圈圈)相碰撞,最终掉下来,落进最下面的杯子里。我们期望机器可以做到”红色的球最终掉进红色的杯子,蓝色球掉进蓝色杯子,依次类推“,我们可以做的事情是调整机器里面的柱子(假设这些柱子表面是不规则的,而且我们可以旋转这些柱子)。

AI创业之路会被OpenAI堵死吗?

上周算是我正式离职创业的第一周,拜访客户、行业交流、选办公场地、置办办公设备等等,很多时间不在电脑面前,所以上周没更新任何文章。嗯,那就这周补上,发两篇!

今天这篇是上周本来就想写的,就是OpenAI DevDay(开发者大会)之后,基于大模型及相关的创业项目前景如何。

Rerank——RAG中百尺竿头更进一步的神器,从原理到解决方案

本文主要内容:

- 为什么一般情况下RAG的检索相关性存在问题?

- Rerank为什么可以解决这个问题?

- 几种常用Rerank组合评测;

- 如何在自己的产品中使用Rerank?

检索增强生成(RAG)是解决大语言模型(LLM)实际使用中的一套完整的技术,它可以有效解决LLM的三个主要问题:数据时效性、幻觉和数据安全问题(在我之前的文章《大模型主流应用RAG的介绍——从架构到技术细节》中有详细介绍)。但是随着RAG越来越火热,使用者越来越多,我们也会发现用的好的人/团队其实还是不多的。这也是RAG常被人吐槽的一点:入门简单,用好却非常难!

对于RAG的效果,我们之前已经做了很多方面的优化了,包括:

- 优化内容提取的方法:从源头解决内容提取的有效性,包括文本内容、表格内容(保留制表符)和图片内容(OCR识别)等,可以参看我之前的文章《完全指南——使用python提取PDF中的文本信息(包括表格和图片OCR)》;

- 优化chunking:从最开始的512固定长度切分,到后面的句切分,再到后面的NLTK和SpaCy,具体可参见我之前写的《最详细的文本分块(Chunking)方法——可以直接影响基于LLM应用效果》;

- 再之后是优化embedding模型:Embedding模型的选择其实很魔性,我们在优化过程中也会不断否定之前的一些判断。比如我们最开始用m3e,后面用bge,再后面还用了通义千问的embedding模型。总体来说,收费的通义千问还是好一些,但是不明显,有些方面却不如bge。最近一朋友也向我推荐了Jina embedding模型,不过他们的中文模型需要12月份才出来;

- 我们还优化了其他一些过程:比如prompt模板、关键词摘要、元数据存储等。

这些优化确实给我们带来了非常好的效果,但不够!我们在一些客户的实践过程中,还是发现相关性效果不佳,甚至造成了其中一个客户选择了其他方案(使用RAG+GPT-4的方案)。

我们还是坚持用国产大模型(如Baichuan2-13B、ChatGLM3-6B和QWen-14B等),毕竟主要服务的还是国内客户,加上现在接触的多数客户其实都有私有化部署的需求。所以我们进行了一段时间的探索,发现我们还有一项很有效的优化没有去做——ReRank。

所以,虽然Rerank优化我们还在做,但是今天我们可以先聊聊ReRank这个话题。

为什么需要Rerank

我们发现,在10月中旬之前,国内外的互联网上很难发现Rerank相关的话题。有少量人提到了,但是基本上都没有提到解决方案。我和小明在讨论Rerank的时候其实是先从提问题开始的。

提升RAG——选择最佳Embedding和重新排名模型

在构建检索增强生成(RAG)管道时,一个关键组件是Retriver。我们有各种各样的Embedding模型可供选择,包括OpenAI、CohereAI和开源的Sentence-Transformers。此外,CohereAI和Sentence-Transformers也提供了一些重新排序器。

但是有了这些选项,我们如何确定最佳组合以获得一流的检索性能呢?我们如何知道哪种Embedding模型最适合我们的数据?或者哪个重新排名对我们的结果提升最大?

在这篇博文中,我们将使用LlamaIndex的检索评估(Retrieval Evaluation)模块来快速确定Embedding和重新排名模型的最佳组合。让我们开始吧!

让我们首先从理解检索评估(Retrieval Evaluation)中可用的度量标准开始。

理解检索评价中的度量标准

为了衡量我们的检索系统的有效性,我们主要依赖于两个被广泛接受的指标:命中率和**平均倒数排名(MRR)**。让我们深入研究这些指标,了解它们的重要性以及它们是如何运作的。

命中率:

Hit rate计算在前k个检索文档中找到正确答案的查询比例。简单来说,它是关于我们的系统在前几次猜测中正确的频率。

平均倒数排名(MRR):

对于每个查询,MRR通过查看排名最高的相关文档的排名来评估系统的准确性。具体来说,它是所有查询中这些秩的倒数的平均值。因此,如果第一个相关文档是顶部结果,则倒数排名为1;如果是第二个,倒数是1/2,以此类推。

现在我们已经确定了范围并熟悉了参数,是时候深入实验了。想要亲身体验,你也可以使用我们的谷歌Colab笔记本

设置环境

1 | !pip install llama-index sentence-transformers cohere anthropic voyageai protobuf pypdf |

设置各种key

1 | openai_api_key = 'YOUR OPENAI API KEY' |

下载数据

本次实验我们将使用Llama2论文吧。

1 | !wget --user-agent "Mozilla" "https://arxiv.org/pdf/2307.09288.pdf" -O "llama2.pdf" |

加载数据

让我们加载数据。我们将使用从第1页到第36页进行实验,不包括目录、参考文献和附录。

然后将该数据解析为节点,节点表示我们想要检索的数据块。我们确实使用chunk_size为512。

1 | documents = SimpleDirectoryReader(input_files=["llama2.pdf"]).load_data() |

生成问题-上下文对:

为了评估的目的,我们创建了一个问题-上下文对的数据集。这个数据集可以被看作是我们数据中的一组问题及其相应的上下文。为了消除评估Embedding(OpenAI/ CohereAI)和重新排序(CohereAI)的偏见,我们使用Anthropic LLM来生成问题-上下文对。

让我们初始化一个prompt模板来生成问题-上下文对。

1 | # Prompt to generate questions |

过滤句子的功能,比如— Here are 2 questions based on provided context

1 | # function to clean the dataset |

自定义检索:

为了识别最优的检索器,我们采用了Embedding模型和重新排序器的组合。首先,我们建立一个基本的VectorIndexRetriever。检索节点后,我们引入一个重新排序器来进一步优化结果。值得注意的是,在这个特殊的实验中,我们将similarity_top_k设置为10,并使用reranker选择top5。但是,您可以根据具体实验的需要随意调整此参数。我们在这里用OpenAIEmbedding显示代码,请参阅笔记本获取其他Embeddings的代码。

1 | embed_model = OpenAIEmbedding() |

评估

为了评估我们的检索器,我们计算了平均倒数排名(MRR)和命中率指标:

1 | retriever_evaluator = RetrieverEvaluator.from_metric_names( |

结果:

我们对各种Embedding模型和重新排序器进行了测试。以下是我们考虑的模型:

向量模型:

Rerank模型:

值得一提的是,这些结果为这个特定数据集和任务的性能提供了坚实的见解。但是,实际结果可能会根据数据特征、数据集大小和其他变量(如chunk_size、similarity_top_k等)而有所不同。

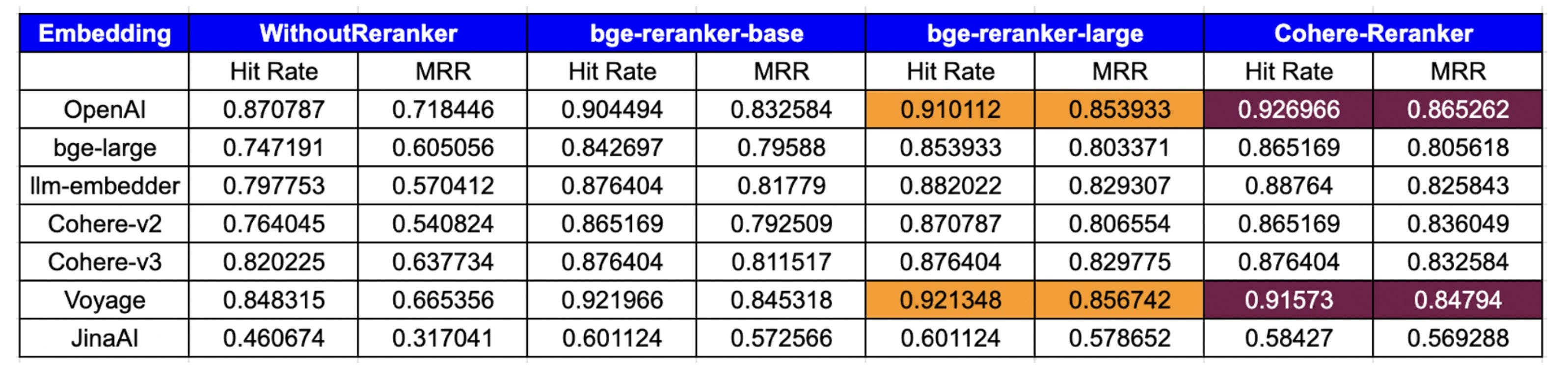

下表展示了基于命中率和平均倒数排名(MRR)指标的评估结果:

分析:

Embedding性能:

- OpenAI:表现出顶级的性能,特别是

cohererank(0.926966命中率,0.865262 MRR)和big-reranker-large(0.910112命中率,0.853993 MRR),表明与重排名工具的兼容性很强。 - big-large:在重新排序器上有了显著的改进,

CohereRerank的结果最好(0.865169命中率,0.805618 MRR)。 - llm-embedder:从重新排名中受益匪浅,特别是

CohereRerank(0.887640命中率,0.825843 MRR),它提供了实质性的性能提升。 - **coherhere **: coherhere最新的v3.0Embeddings优于v2.0,并且通过集成本地CohereRerank,显着提高了其指标,拥有0.876404命中率和0.832584 MRR。

- Voyage:具有较强的初始性能,并被

cohererank(0.915730命中率,0.847940 MRR)进一步放大,表明对重新排名的响应性较高。 - JinaAI:虽然起点较低,但

big -rerank -large的收益显著(命中率0.601124,MRR 0.578652),表明重新排名显著提升了它的性能。其性能不佳的一个潜在原因可能是Embedding针对8K上下文长度进行了优化。

重新排名的影响:

- **WithoutReranker **:为每个Embedding提供基准性能。

- bge-rerrank-base:通常可以提高Embeddings的命中率和MRR。

- bge-rerank-large:此rerank通常为Embeddings提供最高或接近最高的MRR。对于一些Embeddings,它的性能可以与

cohererank相媲美或超过。 - **Cohererank **:始终如一地提高所有Embeddings的性能,通常提供最好或接近最好的结果。

重新排序的必要性:

- 数据清楚地表明重新排名在优化搜索结果中的重要性。几乎所有的Embeddings都受益于重新排序,显示出更高的命中率和mrr

- 重新排序器,特别是

CohereRerank,已经证明了它们将平均表现的Embedding转化为具有竞争力的能力,正如JinaAI所看到的那样。

整体优势:

当考虑到命中率和MRR时,

OpenAI + CohereRerank和Voyage + big-reranker-large的组合成为最热门的竞争者。然而,

cohererank/big-reranker-largereranker在各种Embeddings中所带来的持续改善,使它们成为提高搜索质量的突出选择,无论使用的Embedding是什么。

综上所述,为了实现命中率和MRR的峰值性能,OpenAI或Voyage Embeddings与cohererank/big-reranker-largeReranker的组合脱颖而出。

结论:

在这篇博文中,我们演示了如何使用各种Embeddings和重新排序器来评估和提高检索器的性能。以下是我们的最终结论。

- Embeddings:

OpenAI和VoyageEmbeddings,特别是当与CohereRerank/big-reranker-largereranker配对时,为命中率和MRR设定了黄金标准。 - 重排器:重排器的影响,特别是

cohererank/big-reanker-large,怎么强调都不为过。它们在提高许多Embeddings的MRR方面发挥了关键作用,显示了它们在使搜索结果更好方面的重要性。 - 基础是关键:为初始搜索选择正确的Embedding是至关重要的;如果基本搜索结果不好,即使是最好的重新排名器也帮不上什么忙。

- **一起工作:**为了获得最好的寻回犬,找到Embeddings和重新排序的正确组合是很重要的。这项研究表明,仔细测试并找到最佳配对是多么重要。

原文:https://blog.llamaindex.ai/boosting-rag-picking-the-best-embedding-reranker-models-42d079022e83

像光速一样搜索——HNSW算法介绍

本文是一篇英文翻译转载文章,主要介绍了HNSW算法。

原文链接:https://dataman-ai.medium.com/search-like-light-speed-1-hnsw-c5b0d4665926

我喜欢《玩具总动员》里的太空护林员“巴斯光年”,我喜欢他的口头禅“飞向无限!” 当我搜索信息时,我也享受找到正确信息的速度。这一切都是因为高速互联网和足够的带宽吗?不完全是!事实上,近乎即时搜索结果的算法是至关重要的。信息检索速度是计算机科学中的一个重要课题。随着文本、图像或音频数据的大型语言模型(大语言模型)的高维Embeddings,信息检索的速度是数据科学中的一个优先课题。

在这篇文章中,我将讨论:

- NLP中的向量Embeddings

- KNN (K-Nearest Neighbors)无法跟上速度

- 近似最近邻(ANN)感觉就像光速

- 快速搜索的初始算法

- 理解分层导航小世界图算法(HNSW)

- 代码示例:Embedding新闻文章

- 代码示例:FAISS用于HNSW搜索

本文及其后续系列解释了使巴斯光年的梦想成为可能的最先进算法。您将对这一领域及其应用的重要性有一个景观理解。您将有动手编码示例。我们开始吧。

NLP中的向量Embeddings

向量Embeddings是自然语言处理(NLP)中的一个基本概念,是单词、句子、文档、图像、音频或视频数据等对象的数字表示。这些Embeddings的目的是捕获它们所表示的对象的语义和上下文信息。

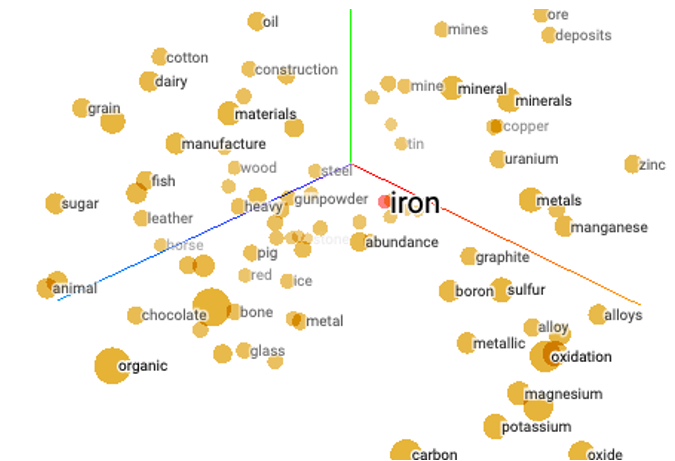

让我们首先描述一下单词Embeddings。2014年,一个突破性的想法Word2Vec(发音为“Word - to - Vector”)在自然语言处理中被提出,它将单词或短语转换或“嵌入”为数字的高维向量,称为单词Embeddings。这些词Embeddings捕捉词之间的语义和上下文关系,使机器能够理解和使用人类语言。图1显示了三维空间中的高维向量。“铁(iron)”这个词与“火药(gunpowder)”、“金属(metals)”和“钢(steel)”等词很接近,但与“有机(organic)”、“糖(sugar)”或“谷物(grain)”等不相关的词相去甚远。例如,猫和狗的概念可能很接近。

图 (1):文字Embeddings(图片来源:作者)

单词Embeddings可以实现单词的相似或不相似。这是一项了不起的创新。既然单词可以嵌入,为什么句子不能呢?这就是句子Embeddings的诞生。句子Embeddings捕获整个句子的语义和上下文信息,使机器能够理解和比较句子。生成句子Embeddings的常用方法包括Doc2Vec (Document-to-vector)。强大的基于llm的词Embeddings将成为NLP的标准,如BERT(来自Transformers的双向编码器表示)、ELMo(来自语言模型的Embeddings)、Llama(大型语言模型元AI,由Meta AI于2023年2月推出),以及OpenAI的多个模型。

既然文本可以作为向量嵌入,为什么不能嵌入图像呢?这就产生了图像Embeddings。卷积神经网络(cnn)和视觉几何组(VGG)用于生成图像Embeddings。图像Embeddings使图像检索和分类成为可能。

既然图像可以作为矢量嵌入,为什么不能嵌入音频呢?你说得对!音频Embeddings可以捕获音频数据的声学特征,并可以进行音频分类和检索。视频Embeddings如何?它们捕获图像特征流用于视频分类。那么地理空间Embeddings呢?当然可以。纬度和经度坐标等地理空间数据可以嵌入到高维向量中,便于信息检索。

Embeddings使一切变得简单。如果你有一篇文章,需要找到类似的文章,你只需要计算你的文章的向量到其他文章的向量之间的距离。最近的向量就是你的搜索结果。我们可以用k近邻法(KNN),对吧?然而,速度是个问题。KNN的搜索将使光年皱眉。对于巴斯光年来说,完成一次简单的搜索需要…需要不知道多少年。研究的挑战不是最近的邻居在哪里,而是“如何”找到它们。

k-最近邻(KNNs)无法跟上速度

假设你有一本新书,你想在图书馆找到类似的书。k-最近邻(KNN)将浏览书架上的每一本书,并将它们从最相似到最不相似的顺序排列,以确定最相似的书。你有耐心做这么麻烦的工作吗?相反,人工神经网络对图书馆中的图书进行预排序和索引。要找到与你的新书相似的书,你所需要做的就是去正确的楼层,正确的区域,正确的通道找到相似的书。此外,你通常不需要对前10本相似的书进行精确排名,比如100%、99%或95%的匹配度。这就是近似近邻(ANN)的思想。

让我们来了解一下为什么人工神经网络可以更有效地搜索。

近似最近邻(ANN)感觉像光速

ANN (Approximate Nearest Neighbors)对大数据进行预索引,方便快速搜索。在索引期间,构建数据结构以促进更快的查询。当您想为一个查询点找到近似的最近邻居时,您可以将该查询点提供给ANN算法。人工神经网络算法首先从数据集中识别一组可能接近查询点的候选数据点。使用预构建的数据结构选择候选对象。这一步骤大大减少了需要检查接近性的数据点的数量。在候选点被选中之前,ANN计算每个候选点与查询点之间的实际距离(如欧几里得距离、余弦相似度)。然后根据与查询点的距离/相似度对候选项进行排名。排名靠前的候选人作为近似近邻返回。在某些情况下,还可以设置距离阈值,只返回该阈值内的候选对象。人工神经网络背后的关键思想是,为了显著降低计算成本,它牺牲了找到绝对最近邻的保证。这些算法的目标是在计算效率和准确性之间取得平衡。

然而,在高维空间中,过去的实验表明ANN并不比KNN节省多少时间(见[4])。有几种创新的人工神经网络算法适用于高维空间。我将列出这些算法的字母表。您很快就会熟悉这些字母,并且可能更愿意在NLP社区中使用它们进行交流。让我们学习流行的最先进的算法。

最先进的快速搜索算法

这些不同的人工神经网络算法是不同的方法来形成数据结构,以实现有效的检索。有三种类型的算法:基于图的、基于哈希的和基于树的。

基于图的算法创建数据的图表示,其中每个数据点是一个节点,边表示数据点之间的接近性或相似性。最引人注目的是层次导航小世界图(HNSW)。

基于哈希的算法使用哈希函数将数据点映射到哈希码或桶。流行的算法包括:位置敏感哈希(LSH)、多索引哈希(MIH)和产品量化

基于树的算法将数据集划分为树状结构,以便快速搜索。流行的是kd树、球树和随机投影树(RP树)。对于低维空间(≤10),基于树的算法是非常有效的。

有几个流行的代码库:

- Scikit-learn:它的

NearestNeighbors类提供了一个简单的接口,可以使用LSH等技术进行精确和近似的最近邻搜索。 - Hnswlib:它是HNSW的Python包装器。

- FAISS:该库支持多种ANN算法,包括HNSW, IVFADC(带ADC量化的倒置文件)和IVFPQ(带产品量化的倒置文件)。

- Annoy (Approximate Nearest Neighbors Oh Yeah):Annoy是一个c++库,也提供了Python接口。

- NMSLIB(非度量空间库):它是用c++编写的,并具有Python包装器。它可以执行HNSW、LSH、MIH或随机投影树等算法。

使用上述代码库,您可以超级快速地执行搜索查询。您还需要了解其他库的变体。这里我只提到其中的三个。第一个是PyNNDescent。PyNNDescent是一个Python库,用于基于NN-descent的搜索算法,它是LSH的一个变体。第二个是NearPy。它支持多个距离度量和哈希族。第三个是PyKDTree。PyKDTree是一个Python库,用于基于kd树的最近邻(KNN)搜索。虽然kd树更常用于精确搜索,但PyKDTree也可以通过一些启发式优化用于近似搜索。

此外,如果您询问哪些算法和库执行速度最好,您只需要了解**ANN- benchmark **库,专门为对人工神经网络搜索算法进行基准测试而设计。它提供了一个标准化的框架来评估算法,如Annoy, FLANN, scikit-learn (LSHForest, KDTree, BallTree), PANNS, NearPy, KGraph, NMSLIB(非度量空间库),hnswlib, RPForest, FAISS, nndescent, PyNNDescent等等。

这不仅仅是最近邻在哪里,而是如何有效地找到他们。让我们学习第一个算法——HNSW。HNSW通常可以在几毫秒内从数百万个数据点中找到最近邻。

了解分层导航小世界图(HNSW)

HNSW是一种用于在高维空间中进行高效人工神经网络搜索的数据结构和算法。它是跳表和小世界图(SWG)结构的扩展,可以有效地找到近似的最近邻。如果我们先学习跳表和小世界图,学习HNSW就会很简单。

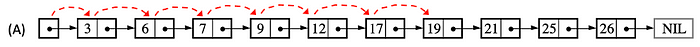

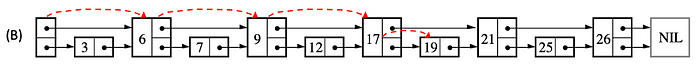

跳表是一种数据结构,用于维护一组已排序的元素,并允许进行高效的搜索、插入和删除操作。它是由William Pugh在1989年发明的。图(2)显示了数字[3、6、7、9、12、17、19、21、25、26]的排序链表。假设我们想找到目标19。当值小于目标时,我们向右移动。需要6步才能找到它。

图 (2): 排序链表

现在,如果列表的每个其他节点都有一个指向前面节点2的指针,如图3所示,可以将这些指针视为“高速公路”。数学规则是“当数值小于目标时向右移动”。需要4个步骤才能达到19。

图 (3): 跳表,其指针指向后面两个节点

这些高速公路加快了搜索速度。我们可以增加更多。现在,如果列表中每三个其他节点都有一个指向前面第三个节点的指针,如图(4)所示,那么只需要3步就可以到达19。

你可能会问,如何选择这些点作为”高速公路“?它们可以是预先确定的或随机选择的。这些节点的随机选择是Small World和NHSW中数据构建的重要步骤,我将在后面介绍。

图 (4): 跳表再升级,指向后面三个节点的指针

由跳表的思路延伸到Small World,我们来看看是怎么做的。

由跳表的思路延伸到Small World

小世界(small world)网络是一种特殊的网络,在这种网络中,你可以快速地联系到网络中的其他人或点。这有点像“凯文·培根的六度”(Six Degrees of Kevin Bacon)游戏,在这个游戏中,你可以通过一系列其他演员,在不到六个步骤的时间里,将任何演员与凯文·培根联系起来。

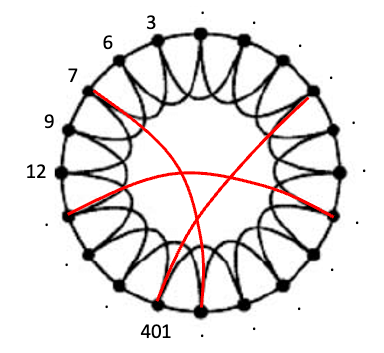

想象一下,你有一群朋友排成一个圆圈,如图5所示。每个朋友都与坐在他们旁边的人直接相连。我们称它为“原始圆”。

现在,这就是奇迹发生的地方。你可以随机选择将其中一些连接改变给圆圈中的其他人,就像图5中的红色连接线一样。这就像这些连接的“抢椅子”游戏。有人跳到另一把椅子上的几率用概率p表示。如果p很小,移动的人就不多,网络看起来就很像原来的圆圈。但如果p很大,很多人就会跳来跳去,网络就会变得有点混乱。当您选择正确的p值(不太小也不太大)时,红色连接是最优的。网络变成了一个小世界网络。你可以很快地从一个朋友转到另一个朋友(这就是“小世界”的特点)。

图 (5): small-world网络

现在让我们学习从小世界网络到可导航小世界的过渡。

从小世界到HNSW

现在我们要扩展到高维空间。图中的每个节点都是一个高维向量。在高维空间中,搜索速度会变慢。这是不可避免的“维度的诅咒”。HNSW是一种高级数据结构,用于优化高维空间中的相似性搜索。

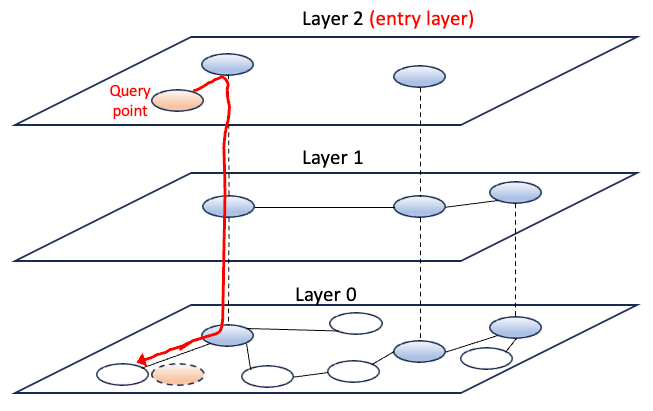

让我们看看HNSW如何构建图的层次结构。HNSW从图(6)中的第0层这样的基础图开始。它通常使用随机初始化数据点来构建。

图 (6): HNSW

HNSW在层次结构中的基础层之上构造附加层。每个层将有更少的顶点和边的数量。可以把高层中的顶点看作是跳跃列表中访问“高速公路”的点。你也可以将这些顶点视为游戏“Six Degrees of Kevin Bacon”中的演员Kevin Bacon,其他顶点可以在不到6步的时间内连接到他。

一旦构建了上面的层次结构,数据点就被编入索引,并准备进行查询搜索。假设查询点是桃色数据点。为了找到一个近似最近的邻居,HNSW从入门级(第2层)开始,并通过层次结构向下遍历以找到最近的顶点。在遍历的每一步,算法检查从查询点到当前节点邻居的距离,然后选择距离最小的相邻节点作为下一个基本节点。查询点到最近邻居之间的距离是常用的度量,如欧几里得距离或余弦相似度。当满足某个停止条件(例如距离计算次数)时,搜索终止。

现在让我们看看HNSW是如何构建这些层的。

HNSW如何构建数据结构?

HNSW首先初始化一个空图作为数据结构的基础。该图表示一个接一个插入数据点的空间。HNSW将数据点组织成多层。每一层表示数据结构中不同级别的粒度。层数是预定义的,通常取决于数据的特征。

每个数据点随机分配到一个层。最高的一层用于最粗略的表示,随着层的向下移动,表示变得更精细。这个任务是用一个特定的概率分布来完成的,这个概率分布叫做指数衰减概率分布。这种分布使得数据点到达更高层的可能性大大降低。如果你还记得跳跃列表中随机选择的数据点作为“高速公路”,这里的一些数据点是随机选择到最高层的。在后面的代码示例中,我们将看到每层中的数据点数量,并且数据点的数量在更高层中呈指数级减少。

为了在每一层内有效地构建连接,HNSW使用贪婪搜索算法。它从顶层开始,试图将每个数据点连接到同一层内最近的邻居。一旦建立了一层中的连接,HNSW将使用连接点作为搜索的起点继续向下扩展到下一层。构建过程一直持续到处理完所有层,并且完全构建了数据结构。

让我们简单总结一下HNSW中数据结构的构造。让我也参考Malkov和Yashunin[3]中的符号,并在附录中解释HNSW算法。您可能会发现它们有助于更明确地理解HNSW的算法。HNSW声明一个空结构并逐个插入数据元素。它保持每个数据点每层最多有M个连接的属性,并且每个数据点的连接总数不超过最大值(Mmax)。在每一层中,HNSW找到与新数据点最近的K个邻居。然后,它根据距离更新候选数据点集和找到的最近邻居列表(W)。如果W中的数据点数量超过了动态候选列表(ef)的大小,则该函数从W中删除最远的数据点。

接下来,我将向您展示代码示例。该笔记本可通过此链接获得。

代码示例

接下来,让我们使用库FAISS执行HNSW搜索。我将使用NLP中包含新闻文章的流行数据集。然后,我使用“SentenceTransformer”执行Embeddings。然后,我将向您展示如何使用HNSW通过查询搜索类似的文章。

Data

1 | import pandas as pd |

输出形状为(120000,3),列为[‘ Class Index ‘, ‘ Title ‘, ‘ Description ‘]。我们对“描述”栏感兴趣。以下是排名前五的记录。

- 路透社——卖空者,华尔街日益减少的\band极端愤世嫉俗者,又看到了绿色

- 路透——私人投资公司凯雷投资集团(\which)以在国防工业投资时机恰当、偶尔引发争议而闻名,该公司已悄然将赌注押在了市场的另一个领域

- 路透社——油价飙升,加上对\about经济和盈利前景的担忧,预计将在下周\summer经济低迷的深度\hang拖累股市

- 路透社——一位石油官员周六表示,在\intelligence显示反叛民兵可能袭击\infrastructure后,当局已经停止了伊拉克南部主要管道\flows的石油出口

- 法新社——在距离美国总统大选仅剩三个月的时间里,世界油价不断刷新纪录,人们的钱包越来越紧,这给经济带来了新的威胁

数据嵌入

出于说明的目的,我只使用10,000条记录进行Embeddings。

1 | sentences = train['Description'][0:10000] |

您需要pip安装“sentence_transformers”库。

1 | !pip install sentence_transformers |

然后让我们使用预训练模型“bert-base-nli-mean-tokens”来声明模型。在本页上有许多预先训练好的模型。

1 | model = SentenceTransformer('bert-base-nli-mean-tokens') |

然后我们将“句子”编码为“sentence_embeddings”。

1 | sentence_embeddings = model.encode(sentences) |

输出是10,000个列表。每个列表或向量的维数为768。下面是前5个Embeddings的输出。

array([[-0.26105028, 0.8585296 , 0.03941074, …, 1.0689917 , 1.1770816 , -0.74388623], [-0.2222097 , -0.03594436, 0.5209106 , …, 0.15727971, -0.3867779 , 0.49948674], [-0.3001758 , -0.41582862, 0.86036515, …, -0.6246218 , 0.52692914, -0.36817163], [ 0.3295024 , 0.22334357, 0.30229023, …, -0.41823167, 0.01728885, -0.05920589], [-0.22277102, 0.7840586 , 0.2004052 , …, -0.9121561 , 0.2918987 , -0.12284964]], dtype=float32)

这有助于保存Embeddings以备将来使用。

1 | with open(path + '/AG_news.npy', 'wb') as file: |

在上面的代码中,我使用了“npy”文件扩展名,这是NumPy数组文件的常规扩展名。下面是加载数据的代码:

1 | with open (path + '/AG_news.npy', 'rb') as f: |

有了这些Embeddings,我们就可以在HNSW数据结构中组织它们了。

使用FAISS构建NHSW数据结构索引

您需要像下面这样pip安装FAISS库:

1 | !pip install faiss-cpu --no-cache |

我将使用HNSWFlat(dim, m)类来构建HNSW。它需要预先确定的参数dim表示向量的维数,m表示数据元素与其他元素连接的边数。

1 | import faiss |

如前所述,HNSW指数的创建分为两个不同的阶段。在初始阶段,该算法采用概率分布来预测引入新数据节点的最上层。在接下来的阶段,收集每个数据点的最近邻居,然后用一个表示为m的值进行修剪(在我们的例子中是m=16)。整个过程是迭代的,从插入层开始,一直到底层。

HNSW中有两个重要参数“efConstruction”和“efSearch”。这两个参数控制着索引结构构建的效率和有效性。它们帮助您在HNSW索引结构中的索引构建和最近邻搜索操作的速度和质量之间取得平衡。

- efConstruction:该参数用于HNSW索引的构建。它控制了构建索引结构的速度和结构质量之间的权衡。” efConstruction “值决定了在构建阶段要考虑多少候选项目。较高的“efConstruction”值将产生更准确的索引结构,但也会使构建过程变慢。

- efSearch:该参数用于在HNSW索引中查找查询点的最近邻居。“efSearch”值控制搜索速度和搜索质量之间的权衡。较高的“efSearch”值将导致更准确和详尽的搜索,但也会更慢。我们将“efConstruction”和“efSearch”分别设置为40和16:

1 | index.hnsw.efConstruction = 40 |

我们已经声明了上面的数据结构。现在我们准备将数据“sentence_embeddings”一个接一个地插入到数据结构中:

1 | index.add(sentence_embeddings) |

一旦完成,我们可以检查HNSW数据结构中有多少数据元素:

1 | index.ntotal |

输出为10000。它是“sentence_embeddings”中的数据点数。接下来,HNSW建造了多少层?让我们来检查最大级别:

1 | # the HNSW index starts with no levels |

最高级别为2.0。这意味着有第0层,第1层和第2层。接下来,您可能想知道每层中数据元素的数量。让我们来看看:

1 | levels = faiss.vector_to_array(index.hnsw.levels) |

输出为array([0,9713,280,7])。“0”没有意义,你可以忽略它。它说第0层有9713个数据元素,第1层有280个元素,第2层只有7个元素。注意,9713 + 280 + 7 = 10000。您是否发现,较高层的数据元素数量比前几层呈指数级减少?这是因为数据元素的层分配采用指数衰减概率分布。

FAISS为HNSW搜索示例

假设我们的搜索查询是“经济繁荣与股市(economic booming and stock market)”。我们希望找到与我们的搜索查询相关的文章。我们将首先嵌入搜索查询:

1 | qry1 = model.encode(["economic booming and stock market"]) |

使用代码index.search(),搜索过程非常简单。这里k是最近邻居的个数。我们将其设置为5以返回5个邻居。index.search()函数返回两个值” d “和” I “。

- “d”:查询向量与k个最近邻居之间的距离列表。默认的距离度量是欧几里得距离。

- “I”:它是索引中k个最近邻居的位置对应的索引列表。这些索引可用于查找数据集中的实际数据点。

1 | %%time |

索引列表的输出是[[1467 4838 4464 7461 8299]]。我们将使用这些索引打印出搜索结果。

注意,我使用“%%time”来度量执行时间。它输出

*CPU时间:user: 5.57 ms, sys: 5µs, total: 5.58 ms

这意味着搜索只需要几毫秒。这确实是令人难以置信的快!

距离输出列表为:[[158.19066 163.69077 164.47517 164.64172 164.64172]]

1 | for i in I[0]: |

输出:

‘Rising oil prices are expected to hit China’s growth rate this year.’

‘Developing countries are starting to flex their financial muscles and invest overseas.

‘The Tehran Stock Exchange has performed magnificently, but the market’s list of risks is outsized.’

‘Federal Express raised its earnings forecast, citing strong demand for its international express, ground and less-than-truckload services.’

‘Federal Express raised its earnings forecast, citing strong demand for its international express, ground and less-than-truckload services.’ (Our data have duplications)

这些文章都是关于经济和股票市场的新闻。搜索速度以毫秒计非常快。这不仅仅是结果在哪里的问题,而是如何快速得到结果的问题,不是吗?

您可以通过此链接下载笔记本进行上述搜索。

总结

我希望这篇文章能帮助你理解近似近邻(ANN),以及它是如何提供高效搜索的。这篇文章解释了不同的人工神经网络算法,包括基于图的HNSW,基于哈希的LSH或产品量化,以及基于树的KD-Trees。这篇文章解释了HNSW如何构建其数据结构并逐个插入数据元素。本文演示了如何使用FAISS库构建用于查询搜索的HNSW。在下一篇文章“搜索像光速- (2)LSH,”中,我将讨论基于哈希的算法。

附录

在Malkov和Yashunin[3]的论文中,算法1到5伪代码中提供了HNSW方法。伪代码给出了算法的具体定义。我将这些描述添加到伪代码中,因为一些读者可能会发现它们有助于理解HNSW。算法1、算法2和算法3或算法4中的一个用于完成数据结构。一旦数据结构完成,以后的任何查询搜索都只使用算法5。

- 算法1:“INSERT”函数构建数据结构

- 算法2:“SEARCH-LAYER”函数计算KNN并存储邻居

- 算法3:“SEARCH-NEIGHBORS-SIMPLE”是一种选择邻居的简单方法

- 算法4:“SELECT-NEIGHBORS-HEURISTIC”函数是一种更复杂的选择邻居的方法

- 算法5:“KNN-SEARCH”函数进行查询搜索

让我们从算法1开始。

1 | Algorithm 1: INSERT() |

它在多层图中插入一个新元素q,保持每个元素每层最多有M个连接,并且每个元素的连接总数不超过Mmax的属性。该算法还保证连接元素之间的距离不大于某一最大距离,并且每层的连接数是均衡的。步骤如下:

- W←∅:初始化一个空列表W来存储当前找到的最近的元素。

- ep←get enter point for hnsw:获取多层图hnsw的进入点(即起始点)。

- L←ep的电平:获取进入点ep的电平。

- l←ln(unitif(0..1))∙mL⌋:为新元素q生成一个介于0和mL之间的随机级别,其中mL是级别生成的归一化因子。

- for lc←L…L +1:循环从L到L +1的层。

- W←SEARCH LAYER(q, ep, ef=1, lc):使用进入点ep和最大距离ef=1在lc层中搜索离q最近的元素。将找到的元素存储在列表W中。

- ep←取W到q最近的元素:取W到q最近的元素。

- for lc←min(L, L)…0:循环遍历从min(L, L)到0的层。

- W←SEARCH LAYER(q, ep, efConstruction, lc):使用进入点ep和最大距离efConstruction搜索层lc中离q最近的元素。将找到的元素存储在列表W中。

- neighbors←SELECT neighbors (q, W, M, lc):选择W到q最近的M个邻居,只考虑lc层的元素。

- 在lc层添加邻居到q的双向连接:在lc层添加q与所选邻居之间的双向连接。

- 对于每个e∈neighbors: //如果需要收缩连接

对于q的每个邻居e,检查e的连接数是否超过Mmax。如果是这样,使用SELECT neighbors (e, eConn, Mmax, lc)选择一组新的邻居来收缩e的连接,其中eConn是e在lc层的当前连接集。 - eNewConn←SELECT NEIGHBORS(e, eConn, Mmax, lc):为e选择一组新的邻居,只考虑lc层的元素,保证连接数不超过Mmax。

set neighborhood (e) at layer lc to eNewConn:将层lc的e的连接集更新为新的set eNewConn。ep <- W:设置hnsw的进入点为q。if 1 > L:将hnsw的起始点设为q,因为新元素q现在是图的一部分。return hnsw:返回更新后的多层图hnsw。

让我们看看算法2。

它在HNSW数据结构上执行K近邻搜索,以查找特定层lc中与查询元素q最近的K个元素。然后,它根据查询元素q与候选元素C和e之间的距离更新候选元素C的集合和找到的最近邻居列表W。最后,如果W中的元素数量超过了动态候选列表ef的大小,则该函数删除从W到q最远的元素。

1 | Algorithm 2: SEARCH-LAYER() |

以下是上述代码的步骤:

- 初始化变量v为当前的入口点ep。

- 初始化集合C为当前候选集合。

- 初始化一个空列表W来存储找到的最近邻。

- 循环直到候选集合C中的所有元素都求值为止。

- 从候选元素集合c中提取离查询元素q最近的元素c。

- 获取从找到的最近邻W到查询元素q的列表中最远的元素f。

- 如果c到q的距离大于f到q的距离:

- 然后打破这个循环。

- 对于lc层c邻域内的每个元素e:

- 如果e不在访问元素v的集合中,则:

- 将e添加到访问元素v的集合中。

- 设f为从W到q的最远的元素。

- 如果e和q之间的距离小于等于f和q之间的距离,或者W中的元素个数大于等于ef(动态候选列表的大小),则:

- 将候选集C更新为C∈e。

- 将发现的最近邻居W的列表更新为W∈e。

- 如果W中的元素个数大于等于ef,则:

- 移除从W到q的最远的元素。

- 返回找到的最近邻居W的列表。

算法3.

这是一个简单的最近邻选择算法,它接受一个基本元素q、一组候选元素C和一些邻居M作为输入。它返回候选元素C集合中离q最近的M个元素。

1 | Algorithm 3: SELECT-NEIGHBORS-SIMPLE() |

步骤如下:

- 初始化一个空集R来存储选中的邻居。

- 初始化一个工作队列W来存储候选元素。

- 如果设置了extendCandidates标志(即true),则通过将C中每个元素的邻居添加到队列W来扩展候选列表。

- 而W的大小大于0,R的大小小于M:

- 从W到q中提取最近的元素e。

- 如果e比R中的任何元素更接近q,把e加到R中。

- 否则,将e添加到丢弃队列Wd中。

- 如果设置了keepPrunedConnections标志(即true),则从Wd添加一些丢弃的连接到R。

- 返回R。

让我们看看算法4。

这是一个更复杂的最近邻选择算法,它接受一个基本元素q、一组候选元素C、若干个邻居M、一个层数lc和两个标志extendCandidates和keepPrunedConnections作为输入。它返回由启发式选择的M个元素。

1 | Algorithm 4: SELECT-NEIGHBORS-HEURISTIC() |

步骤如下:

- 初始化三个队列:R用于选择的邻居,W用于工作的候选,Wd用于丢弃的候选。

- 设置R的大小为0,W的大小为C的大小。

- 如果extendCandidates被设置(即,true):

- 对于C中的每个元素e:

- 对于第lc层e的每一个邻居eadj:

- 如果eadj不在W中,则在W中添加它。

- 而W的大小大于0,R的大小小于M:

- 从W到q中提取最近的元素e。

- 如果e比R中的任何元素更接近q,把e加到R中。

- 否则,将e加到Wd。

- 如果设置了keepPrunedConnections(即true):

- 而Wd的大小大于0,R的大小小于M:

- 从Wd到q中提取最近的元素e。

- 如果e比R中的任何元素更接近q,就把e加到R中。

- 返回R。

最后,让我们看看算法5。

这个搜索算法与算法1基本相同。

1 | Algorithm 5: K-NN-SEARCH() |

步骤如下:

- 初始化一个空集合W(当前最近元素的集合),并将进入点ep设置为网络的顶层。

- 设置进入点ep的水平为顶层L。

- 对于每一层lc,从L到1(即从顶层到底层):

- 使用查询元素q和当前最近的元素W搜索当前层,并将最近的元素添加到W中。

- 将进入点ep设置为W到q最近的元素。

- 使用查询元素q和当前最近的元素W搜索下一层,并将最近的元素添加到W中。

- 返回W中最接近的K个元素作为输出。

引用

- [1] Pugh, W. (1990). Skip lists: A probabilistic alternative to balanced trees. Communications of the ACM, 33(6), 668–676. doi:10.1145/78973.78977. S2CID 207691558.

- [2] Xiang Zhang, Junbo Zhao, & Yann LeCun. (2015). Character-level Convolutional Networks for Text Classification

- [3] Yu. A. Malkov, & D. A. Yashunin. (2018). Efficient and robust approximate nearest neighbor search using Hierarchical Navigable Small World graphs.

- [4] Aristides Gionis, Piotr Indyk, Rajeev Motwani, et al. 1999. Similarity search in high dimensions via hashing. In Vldb, Vol. 99. 518–529.

Update: 2024-01-26

我们的TorchV Bot产品目前已经开始试用了,详情可以点击:https://www.luxiangdong.com/2024/01/25/lanuch-1

目前只接受企业用户试用,需要您填写一些信息,必要信息如下:

邮箱: 用来接收地址和账号

如何称呼您:

所服务的公司:

您的职位:

当然,如果您可以告诉我们您的使用场景,我们将更加感激!

对了,可以发送到yuanwai@mengjia.net

另外,也可以直接加我微信(lxdhdgss)联系我。

ChatGLM3-6B针对RAG能力对比测试,最意外的竟是ChatGPT

ChatGLM3-6B来了

上周又新出来一个新的国产大语言模型——ChatGLM3-6B,据说这次他们还另外上线了3B和1.5B两个模型,但是没有选择开源。这类小体量的模型如果能力OK的话,应用前景是非常广的,可以作为桌面应用,甚至在手机上就可以部署。

好,不说这么多,今天我们先来看看ChatGLM3-6B的能力相比之前的ChatGLM2-6B是否有较大提升。

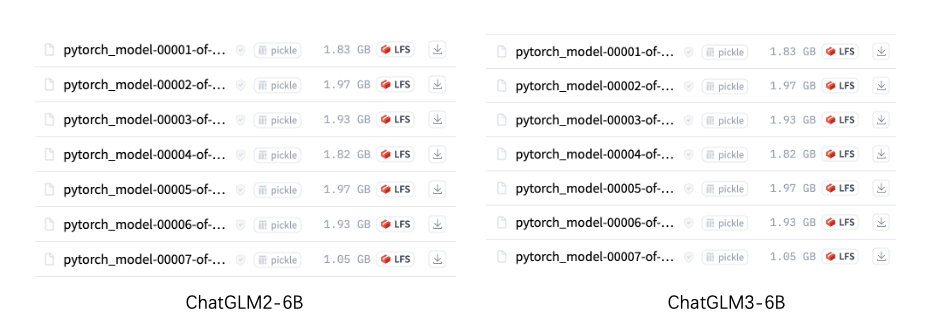

周末的时候我下载了ChatGLM3-6B的模型文件,这次的文件大小和ChatGLM2-6B几乎是差不多的。甚至我开始都觉得可能是同一份model文件,但是使用ll命令看详细字节数,每个文件的大小是不同的,所以我还是重新下载了一份。

说实话,对于ChatGLM2-6B我还是比较失望的,从年初的惊艳,到后面的泯然众人,ChatGLM2-6B在我们RAG应用中已经完全不适用了,连基本的归纳都做不好,我们本来是把离线大模型的希望都寄托给它的。后续在一些应用中,我们使用在线大模型是智谱(ChatGLM所属公司)的Lite版和Pro版,这两个能力上还行。

但是离线需求肯定是一个刚需,特别是对于企业客户,他们不希望自己的机密数据文件被上传到在线大模型,所以后面Baichuan2-13B出来之后,离线的大模型,我们就开始转向了百川。但是百川的13B也有个问题,就是对GPU要求很高,非负载状态下需要18GB的显存,也就是需要至少4090的显卡(24GB),而目前市面上数量较多的3090显卡(16GB)则无法胜任。所以对于此次ChatGLM3-6B的公布,我很激动,希望可以为后面的RAG应用做一个相对性价比较高的配置方案。

测试方法

首先我必须说,我不去做科学的对比测试,如果你需要看官方评测,我也可以贴一个ChatGLM官方给出的2和3的比较:

| Model | GSM8K | MATH | BBH | MMLU | C-Eval | CMMLU | MBPP | AGIEval |

|---|---|---|---|---|---|---|---|---|

| ChatGLM2-6B-Base | 32.4 | 6.5 | 33.7 | 47.9 | 51.7 | 50.0 | - | - |

| Best Baseline | 52.1 | 13.1 | 45.0 | 60.1 | 63.5 | 62.2 | 47.5 | 45.8 |

| ChatGLM3-6B-Base | 72.3 | 25.7 | 66.1 | 61.4 | 69.0 | 67.5 | 52.4 | 53.7 |

我想要更加”老土“一些、更加直接一些的方式,我的评测方法是这样的:

- 参加对比的模型:

ChatGLM2-6B、ChatGLM3-6B、Baichuan2-13B和ChatGPT-3.5。是的,Baichuan2-13B和ChatGPT3.5在参数体量上和6B完全不同,有点耍流氓,但是不妨作为一组对比,从更广度的对比上来看各个模型的能力; - 只测试7个问题:这次我只给出7个问题,分别包括实体提取、逻辑计算、英文翻译、归纳、计数、SQL生成和生成代码展示分析图表(问题集在分项评测中会贴出)。四个模型提交的问题都是一样的,这里需要说明一下就是ChatGLM2和3模型的Cli模式对于输入文字的显示不太友好,所以后面的截图中会出现一些显示问题,但是从答案中可以看到,实际并没有真正丢失这些信息;

- 只截取首次答案:我们都知道大模型对于同一个答案的每次结果输出会出现不太一样,比如连续问十次可能会有一次出现完全不同的结果,所以为了整个测试更加公平,我们只取第一次输出;

- 评比方法:为了让评比更简单明了,在输出中,只要基本正确,我就会判为争取。如果出现了重要的错误,影响了整体的正确性,那才会判错。我后面的各项评测截图都会贴出来,大家也可以自己分析,并得出自己的答案。

所以你看到了,这不是一次科学的评测。我最关心的还是大模型对于RAG应用中G(生成)的支撑效果。

我可以在说说我的环境:Intel i7 / 64GB / RTX 4090 / 2TB SSD,操作系统是Ubuntu Server 22.04,Python3.11,Conda环境。

分项评测

至于测试过程,我就不一一录屏了,直接上评测结果,分为7项测试,最后再加一个总结。

1.实体提取

实体和关系提取当然也可以使用NER,但是有大模型,为什么不用呢,而且它后面还可以做格式,所以在RAG应用中,我们喜欢用大模型来提取实体。而且,这种提取方式对于短句子特别有效——对的,就是对于用户的提问内容的实体提取。

Prompt:

请帮我分析一下这段话”我需要看2021年3月至7月(含)的浙江分公司的自研产品的销售数据报表“,如果包含时间,请帮我列出”时间:xxxx年xx月“这样的格式,如果包含机构,请帮我列出”机构:xxxx“,如果包含其他实体,请帮我列出”实体:xxxx/xxxx“

评测意义

这个prompt中,我们如果可以将时间、机构和其他实体先提取出来,就可以使用元数据过滤,在这么多月份的文件,这么多分公司(机构)的文件中,直接过滤掉大量不相干的文件chunk,极大提高向量相似度计算的速度。对于RAG应用来说,这是很关键的一种优化能力。

评测结果

- ChatGLM2-6B(0):完全不知道在做什么;

- ChatGLM3-6B(0.5):其实它也提取出了时间和机构,但是没有提取出其他的实体,给0.5分;

- Baichuan2-13B(1):应该说提取的比较好,最后的输出没用使用

/是个缺陷; - ChatGPT-3.5(1):提取的比较好,包括

/也给到了,但是它没有7月(含),也许是对中文理解的偏差吧。

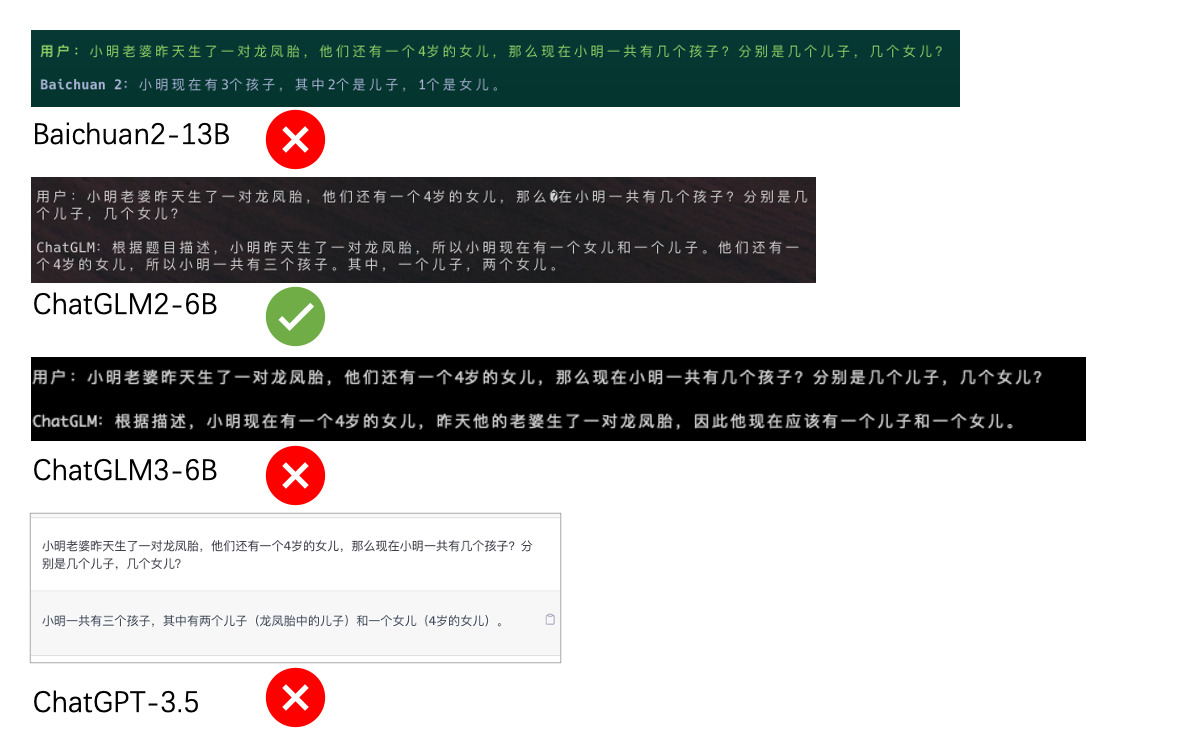

2.逻辑计算

这是一道我用了半年多的题目,很多大模型被我考倒了。

Prompt:

小明老婆昨天生了一对龙凤胎,他们还有一个4岁的女儿,那么现在小明一共有几个孩子?分别是几个儿子,几个女儿?

评测意义

这里需要大模型对中文有一定理解能力,知道龙凤胎是一男一女,然后要去计算4岁的女儿,要做加法。如果大模型的逻辑计算能力出色,那我们可以将很多有文字有数字的内容塞给它,直接计算。比如一篇用记事本记录的今日花销流水账,直接就可以计算出来。是大模型是否”聪明“的一大体现。

评测结果

惊喜的事情来了!

这道题,居然只有被认为最弱的ChatGLM2-6B答对了!!!我之前是试过多次ChatGPT-3.5的,好像基本都是正确的,但是这次居然打错了,看来最近OpenAI算力吃紧,有意降低ChatGPT的能力是有一些依据的。

- ChatGLM2-6B(1):完全正确,惊喜;

- ChatGLM3-6B(0):错误;

- Baichuan2-13B(0):错误;

- ChatGPT-3.5(0):居然连ChatGPT-3.5都错了。

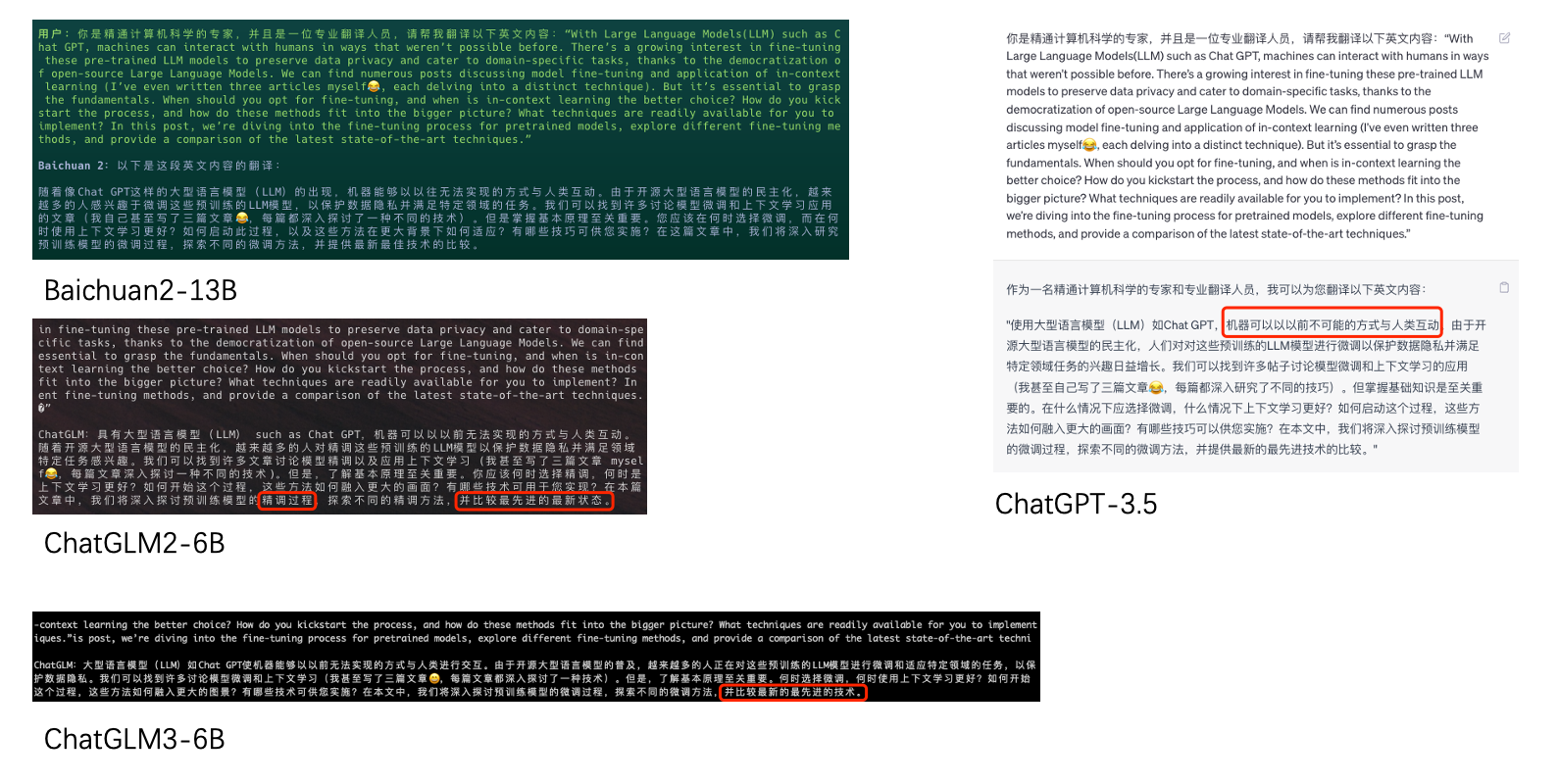

3.翻译

翻译算是一项常规技能了,特别是英文翻译成中文。

Prompt:

你是精通计算机科学的专家,并且是一位专业翻译人员,请帮我翻译以下英文内容:“With Large Language Models(LLM) such as Chat GPT, machines can interact with humans in ways that weren’t possible before. There’s a growing interest in fine-tuning these pre-trained LLM models to preserve data privacy and cater to domain-specific tasks, thanks to the democratization of open-source Large Language Models. We can find numerous posts discussing model fine-tuning and application of in-context learning (I’ve even written three articles myself.., each delving into a distinct technique). But it’s essential to grasp the fundamentals. When should you opt for fine-tuning, and when is in-context learning the better choice? How do you kickstart the process, and how do these methods fit into the bigger picture? What techniques are readily available for you to implement? In this post, we’re diving into the fine-tuning process for pretrained models, explore different fine-tuning methods, and provide a comparison of the latest state-of-the-art techniques.”

评测意义

常规技能,意义就不评价了,对于中文支持的模型,这是必备项。

评测结果

- ChatGLM2-6B(0.5):马马虎虎还是可以的,但还是有三个瑕疵。首先把fine-tuning翻译成了精调,这个也许有些不太符合习惯,一般还是用微调;第二个问题是表情符附近的myself;然后最后一句,”并比较最先进的最新状态“,语法上有些生硬;

- ChatGLM3-6B(0.8):其他都很好,也是最后一句,”并比较最先进的最新状态“,语法上有些生硬,这可能是GLM训练语料存在的问题;

- Baichuan2-13B(1):翻译的比较优质;

- ChatGPT-3.5(1):吹毛求疵一下,”机器可以以以前不可能的方式“这一句有优化空间,但是意思没错。

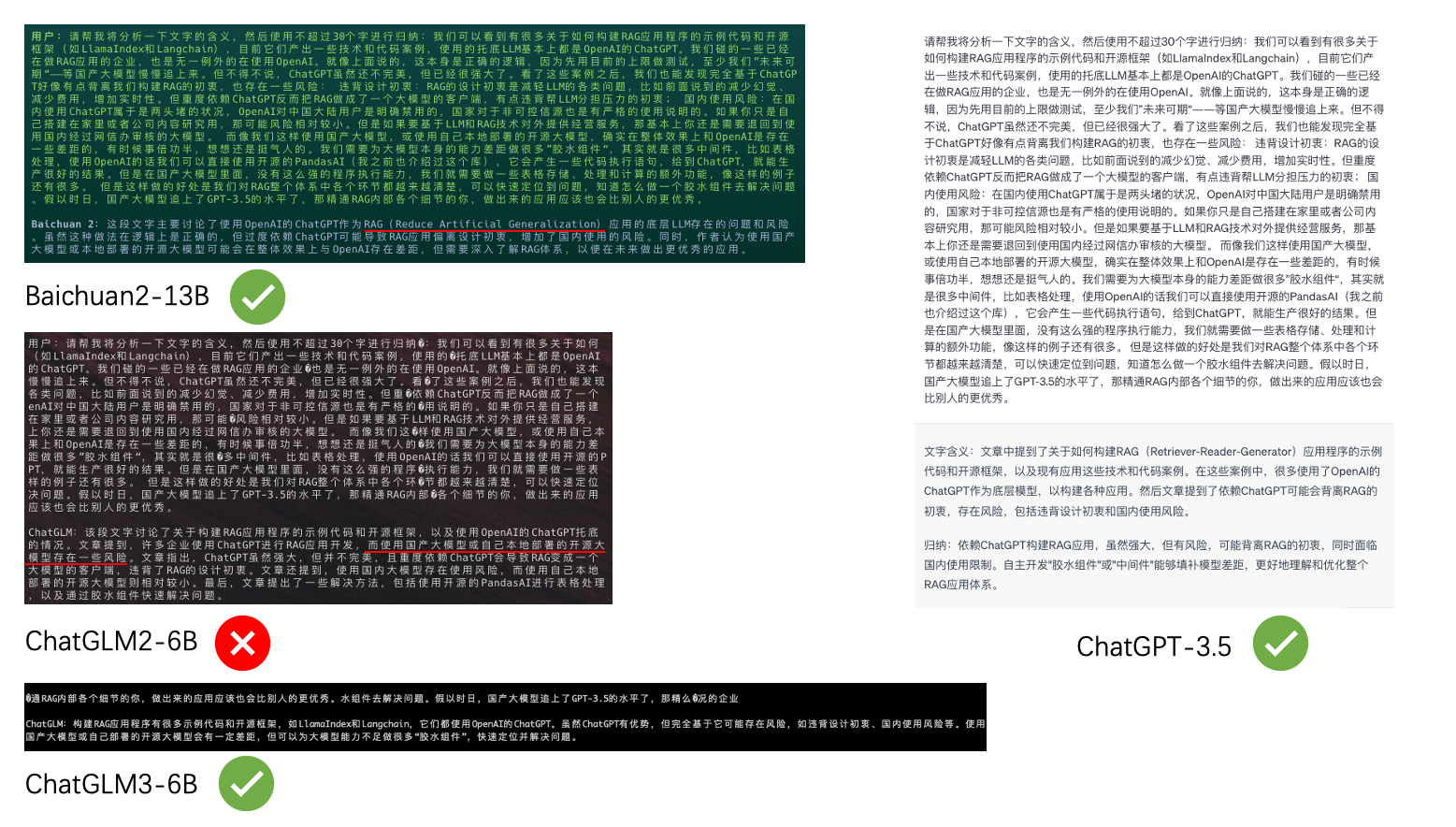

4.归纳

归纳应该算是RAG应用中非常重要的一项能力了,我们通过向量计算召回内容,然后让大模型进行归纳和润色,然后再输出最终结果。所以归纳的能力也不需要过多介绍了。

Prompt:

请帮我将分析一下文字的含义,然后使用不超过30个字进行归纳:我们可以看到有很多关于如何构建RAG应用程序的示例代码和开源框架(如LlamaIndex和Langchain),目前它们产出一些技术和代码案例,使用的托底LLM基本上都是OpenAI的ChatGPT。我们碰的一些已经在做RAG应用的企业,也是无一例外的在使用OpenAI。就像上面说的,这本身是正确的逻辑,因为先用目前的上限做测试,至少我们”未来可期“——等国产大模型慢慢追上来。但不得不说,ChatGPT虽然还不完美,但已经很强大了。看了这些案例之后,我们也能发现完全基于ChatGPT好像有点背离我们构建RAG的初衷,也存在一些风险: 违背设计初衷:RAG的设计初衷是减轻LLM的各类问题,比如前面说到的减少幻觉、减少费用,增加实时性。但重度依赖ChatGPT反而把RAG做成了一个大模型的客户端,有点违背帮LLM分担压力的初衷; 国内使用风险:在国内使用ChatGPT属于是两头堵的状况,OpenAI对中国大陆用户是明确禁用的,国家对于非可控信源也是有严格的使用说明的。如果你只是自己搭建在家里或者公司内容研究用,那可能风险相对较小。但是如果要基于LLM和RAG技术对外提供经营服务,那基本上你还是需要退回到使用国内经过网信办审核的大模型。 而像我们这样使用国产大模型,或使用自己本地部署的开源大模型,确实在整体效果上和OpenAI是存在一些差距的,有时候事倍功半,想想还是挺气人的。我们需要为大模型本身的能力差距做很多”胶水组件“,其实就是很多中间件,比如表格处理,使用OpenAI的话我们可以直接使用开源的PandasAI(我之前也介绍过这个库),它会产生一些代码执行语句,给到ChatGPT,就能生产很好的结果。但是在国产大模型里面,没有这么强的程序执行能力,我们就需要做一些表格存储、处理和计算的额外功能,像这样的例子还有很多。 但是这样做的好处是我们对RAG整个体系中各个环节都越来越清楚,可以快速定位到问题,知道怎么做一个胶水组件去解决问题。假以时日,国产大模型追上了GPT-3.5的水平了,那精通RAG内部各个细节的你,做出来的应用应该也会比别人的更优秀。

评测意义

RAG应用中最重要的技能之一,要保证语义不发生偏转,不能南辕北辙。

评测结果

- ChatGLM2-6B(0):出现严重错误,原文说的是使用ChatGPT有风险,结果归纳的时候变成了国产大模型;

- ChatGLM3-6B(1):非常完美;

- Baichuan2-13B(0.6):画蛇添足了,强行解释了一下RAG,结果解释错了,只能给一个0.6分;

- ChatGPT-3.5(1):非常完美。

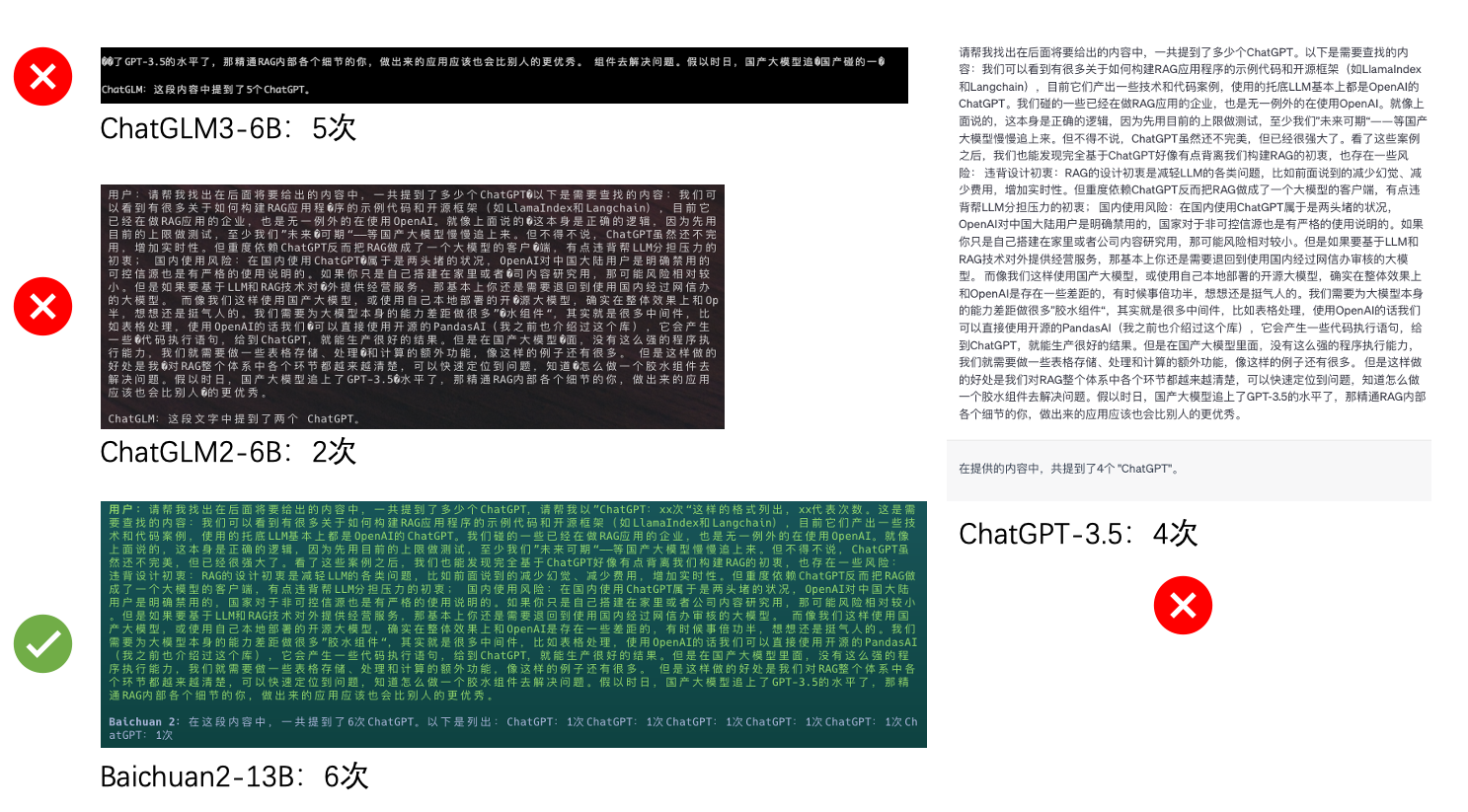

5.统计次数

这也是一个传统艺能了,这可以帮助我们分析文章中哪些内容是比较重要的,和传统的TF-IDF是反着来的。

Prompt:

请帮我找出在后面将要给出的内容中,一共提到了多少个ChatGPT。以下是需要查找的内容:我们可以看到有很多关于如何构建RAG应用程序的示例代码和开源框架(如LlamaIndex和Langchain),目前它们产出一些技术和代码案例,使用的托底LLM基本上都是OpenAI的ChatGPT。我们碰的一些已经在做RAG应用的企业,也是无一例外的在使用OpenAI。就像上面说的,这本身是正确的逻辑,因为先用目前的上限做测试,至少我们”未来可期“——等国产大模型慢慢追上来。但不得不说,ChatGPT虽然还不完美,但已经很强大了。看了这些案例之后,我们也能发现完全基于ChatGPT好像有点背离我们构建RAG的初衷,也存在一些风险: 违背设计初衷:RAG的设计初衷是减轻LLM的各类问题,比如前面说到的减少幻觉、减少费用,增加实时性。但重度依赖ChatGPT反而把RAG做成了一个大模型的客户端,有点违背帮LLM分担压力的初衷; 国内使用风险:在国内使用ChatGPT属于是两头堵的状况,OpenAI对中国大陆用户是明确禁用的,国家对于非可控信源也是有严格的使用说明的。如果你只是自己搭建在家里或者公司内容研究用,那可能风险相对较小。但是如果要基于LLM和RAG技术对外提供经营服务,那基本上你还是需要退回到使用国内经过网信办审核的大模型。 而像我们这样使用国产大模型,或使用自己本地部署的开源大模型,确实在整体效果上和OpenAI是存在一些差距的,有时候事倍功半,想想还是挺气人的。我们需要为大模型本身的能力差距做很多”胶水组件“,其实就是很多中间件,比如表格处理,使用OpenAI的话我们可以直接使用开源的PandasAI(我之前也介绍过这个库),它会产生一些代码执行语句,给到ChatGPT,就能生产很好的结果。但是在国产大模型里面,没有这么强的程序执行能力,我们就需要做一些表格存储、处理和计算的额外功能,像这样的例子还有很多。 但是这样做的好处是我们对RAG整个体系中各个环节都越来越清楚,可以快速定位到问题,知道怎么做一个胶水组件去解决问题。假以时日,国产大模型追上了GPT-3.5的水平了,那精通RAG内部各个细节的你,做出来的应用应该也会比别人的更优秀。

评测意义

在RAG应用中,我们有时候需要统计各chunk或page中和问题相关的词频,以进行重排(rerank),所以统计也是有实际意义的。

上面这段话(从”以下是需要查找的内容:“开始),其实是有 6处 提到ChatGPT的,我们来看看各个模型的评测结果。

评测结果

- ChatGLM2-6B(0):2次,错误;

- ChatGLM3-6B(0):5次,错误;

- Baichuan2-13B(1):6次,正确;

- ChatGPT-3.5(0):4次,错误。

这个评测中,居然只有Baichuan2-13B是正确的,可怕。

6.生成SQL

生成SQL可以让我们通过语义理解来完成对本地数据库的查询,获取我们需要的数据,而不是通过程序员手动写SQL,或者只能按已经固化的SQL来进行某些变量(比如时间、实体等)的选择。

Prompt:

已知有两个mysql表,一个是代表销售人员的表:Persons,另一个是代表订单的表:Orders。具体的表定义如下: 表Persons: CREATE TABLE Persons (PersonID int, LastName varchar(255), FirstName varchar(255), Address varchar(255), City varchar(255), PRIMARY KEY (PersonID) ); 表Orders: CREATE TABLE Orders (O_Id int NOT NULL, OrderNo int NOT NULL, P_Id int, PRIMARY KEY (O_Id), FOREIGN KEY (P_Id) REFERENCES Persons(PersonID) ) 请帮我生成一个SQL语句,查询销售人员”Zhang san“的所有订单数据。

评测意义

在RAG应用中,根据语义生成SQL的能力是非常强大的,我们真的可以摆脱程序员预设的SQL来做事情。

评测结果

- ChatGLM2-6B(0):

P_Id = 123456是什么鬼,而且OrderNo LIKE '%Zhang san%'也是不知所云了; - ChatGLM3-6B(1):非常正确;

- Baichuan2-13B(1):非常正确;

- ChatGPT-3.5(0):ChatGPT-3.5用的语法我感觉是最好的,但是,它最后犯了一个错误,居然直接是

Persons.LastName = 'Zhang san',还是败在了对中文的理解上啊。

7.生成代码展示分析图表

这项任务我最初觉得是有一些挑战的。

Prompt:

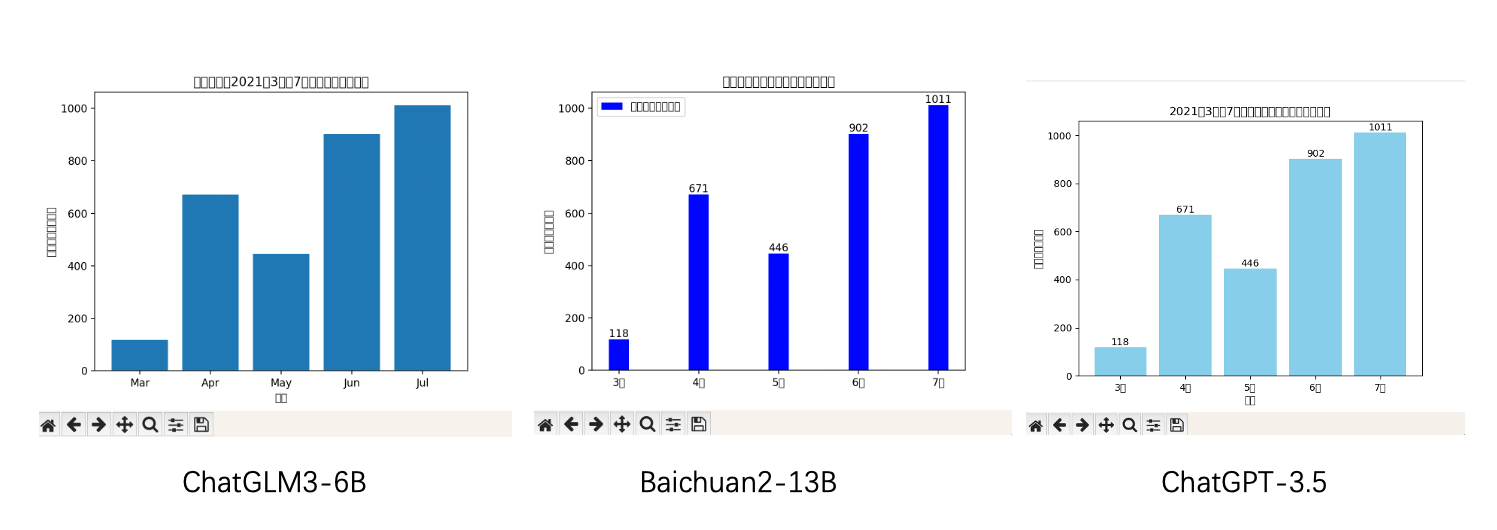

已知浙江分公司2021年3月至7月(含)的自研产品销售数据依次为118万元、671万元、446万元、902万元和1011万元,请帮我用python的pyplot制作一个可查看趋势的柱状图表,注意,月份等标签请使用中文,并且,计量单位使用”万元“。

评测意义

在大模型的企业应用中,除了检索之外,我们很多时候也需要快速的得到一些数据分析指标,特别是一些趋势,用图表会更加直观,所以您直接说话,让应用(业务系统+数据库+大模型)立即给你直观的图表就变得很重要。一般我们会首先将语义转化为python代码,然后用matplotlib.pyplot等组件进行显示。当然,如果更复杂、更美观的分析图表,可以使用其他组件。

评测结果

- ChatGLM2-6B(0):代码直接报错,无法运行;

- ChatGLM3-6B(0.8):基本满足,唯一的缺憾是缺少label,然后对中文的理解反而差了一些,特别是月份变成了May等;

- Baichuan2-13B(1):正确,而且还多了一个图例,很棒,中文乱码是因为我本地没装好中文环境;

- ChatGPT-3.5(1):正确,中文乱码是因为我本地没装好中文环境。

另外我贴一下各个模型给出的代码:

ChatGLM2-6B

1 | import matplotlib.pyplot as plt |

ChatGLM3-6B

1 | import pandas as pd |

Baichuan2-13B

1 | import matplotlib.pyplot as plt |

ChatGPT-3.5

1 | import matplotlib.pyplot as plt |

总结

我们先看总结一下上面的评分:

| 分项 | ChatGLM2-6B | ChatGLM3-6B | Baichuan2-13B | ChatGPT-3.5 |

|---|---|---|---|---|

| 实体提取 | 0 | 0.5 | 1 | 1 |

| 逻辑计算 | 1 | 0 | 0 | 0 |

| 英文翻译 | 0.5 | 0.8 | 1 | 1 |

| 归纳润色 | 0 | 1 | 0.6 | 1 |

| 统计次数 | 0 | 0 | 1 | 0 |

| 生成SQL | 0 | 1 | 1 | 0 |

| 生成图表 | 0 | 0.8 | 1 | 1 |

| 总计 | 1.5 | 4.1 | 5.6 | 4 |

这个结果,惊不惊喜意不意外!Baichuan2-13B和ChatGLM2-6B居然都超过了ChatGPT-3.5。

当然,这只是我针对目前在RAG应用上所做的一些具体能力的测试,如果真的要全面测试,我相信ChatGPT-3.5依然会遥遥领先。而且ChatGPT-3.5对于中文的一些细节方面理解偏差,是主要的失分原因,不然它应该是得到最高的6分的。

另外声明一点,我不是为百川打广告,因为本人不认识任何百川的人员,但目前确实在试用百川的大模型,效果不错。

ChatGLM3-6B这次的能力让我感到惊艳,毕竟和百川13B在参数体量上相差两倍多,但是能力上比较接近,在RAG中非常重要的归纳和SQL生成两项中都非常出色。而且,最关键的事ChatGLM3-6B可以在RTX 3090上部署,这是非常涨粉的优势!

以上三个本地模型都没有经过微调,我相信大家可以在ChatGLM3-6B和Baichuan2-13B中选定其中一款,然后进行微调,去匹配自己的RAG应用。

最后,什么是行业大模型?难道装进去行业知识多就是这个行业的大模型吗?RAG就是来解决行业知识问题的。所以,在行业里面应用,我更倾向于专项大模型,比如金融行业,就需要更强的逻辑计算、SQL和图表生成能力;而文博行业,更倾向的润色、归纳和统计次数等能力。所以,我认为可以有专项大模型,只有行业应用,没有行业大模型。